How to Build a Super Collective Intelligence

When 1 + 1 = 42

How to Build a Super Collective Intelligence

When 1 + 1 = 42

DISCLAMER: This is the Sh*tTY first draft

This is the embarrassing, shitty, unedited, unreviewed, draft of the first few chapters intentionally released as is. Why am I releasing it as is? I don’t know, to learn perhaps… I’m continuing to actually build and test out what I’m writing about here. This may make writing a moot point, but I find it helpful to write things down and structure my thoughts.

Humans, barely intelligent

Humans, barely intelligent

Source: www.gifbay.com/gif/stupid-12

Source: https://giphy.com/search/stupid-people

Source: https://giphy.com/search/stupid-people

We may think that the greatest challenge to humanity today is something like Superbugs, Climate change, inequality, nuclear proliferation, synthetic biology, artificial intelligence, millennials, or a large number of other things that keep us awake at night. But what is the common theme behind these, is there an underlying cause? When we think of global challenges, most of them do have a common cause, humans. Climate change is caused by unsustainable human activity, inequality is fostered by greed and imbalance of power, and millennials, of which I am one, well it's obvious, they are the result of a failed lab experiment crossing a cheeseburger with a Nokia 6210. Now sarcasm aside, If we were to somehow solve these challenges, I.E the symptomes, but not the underlying cause, will New challenges not just pop up in their place? Can we imagine a solution to the underlying cause?

What is the underlying cause here? One possible cause could be that we simply don't have the cognitive capacity and intelligence to think holistically, taking into account the hugely complex systems we live in. A solution to one problem is often the cause of multiple others. If we consider human intelligence, compared to other intelligences, then we have just about the very minimum intelligence anything could have to recognize it is intelligent, but we may be nowhere near the intelligence we need to actually think intelligently. Consider a baby who has just learned to walk, suddenly they are filled with confidence and overestimate their skills. They think they can walk anywhere, cross a busy street, walk into a swimming pool, or straight down a flight of stairs. A baby's confidence in their skill of walking is nowhere near the reality of their skill level. This is a well known cognitive bias and applies to humans of all ages, known as the Dunning-Kruger effect which states that after you know the basics, the less you know, the less you are able to understand how incompetent you actually are, and therefore, ironically, the more confident you are that you know more than you actually do.

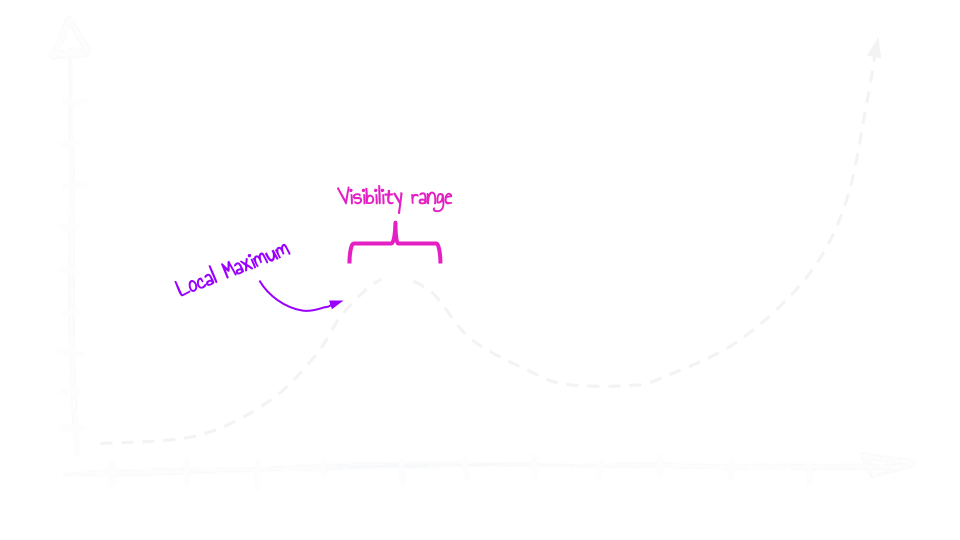

To illustrate this point, I made this chart below

Is there a way we can increase our intelligence, our maturity, our empathy, our wizdom? And I'm not talking about linear growth and a marginal increase of 5% 10% or even 20% higher. For these types of increases our educational systems are good enough, but still bring us nowhere close to where we need to be. I’m talking about a 5x 10x or 200x improvement that is required for us to cross the intelligence requirement gap.

When 1 + 1 = 42

An ant colony is orders of magnitude more intelligent than a singular ant, a brain is orders of magnitude more powerful than a single neuron, bacteria which on its own can survive only for a few minutes in harsh environments, however, can thrive and grow exponentially in the same harsh environment when networked together with millions of other bacteria that openly share genetic information. It is the argument of this paper that it is our highly networked and somewhat open structure that has enabled us to get this far, and that we can rapidly accelerate progress, become more intelligent, more compassionate, more mature, more conscientious, and solve our grand global challenges that currently seem so complex, by embracing this. In this paper I’d like to describe why this is important, what this means and how it could be achieved, in the hope that together we can build an awesome tomorrow.

Oh, and I’m also recording this as an audio/video podcast, because personally I battle to read, my dyslexia turns even the shortest post into a marathon read. Therefore in the hope to encourage others to also create audio version of what they write, i'm doing it here too.

Accounting for my own bias

Before reading further, it will be useful to understand a little about my background as it will help you to know what biases may be distorting my own perceptions of reality and therefore the assumptions and conclusions I write up here.

The work I’ll be describing here started from the flames and rubble of many failed projects and businesses I tried to start in my early career. It gradually coalesced into a coherent project with the birth of my first child and a desire to help my kids become self-directed learners, willing and able to tackle crazy, awesome challenges.

Soon after my daughter was born I started Dev4X, a company I started as a side project, that would focus on developing audacious projects that on the face of it would have little chance of success, but if they did would change the world for the better. The assumption was that there were many people in the world who wanted to do something more meaningful than the bullshit jobs they were spending most of their time on. If we could somehow tap into their wasted talents, for just a few hours a week, to do something meaningful, some modular small task that when stacked together with many thousands of other modular small tasks, that together we could create something amazing. If done openly, then even if a project failed -which was the expectation from the start- then most of the work we put into it would not be wasted, the modularity of the tasks that were completed could then be applied to a different projects (A detailed description of how this is done is described later in the paper). I started with various projects that focused on empowering kids, with the first large project trying to help kids in refugee camps, disaster areas and rural areas who don't have access to school, to help them take their learning into their own hands. We called this the Moonshot Education Project, and here we focused on building a GPS-like learning map, that could help these kids see where they are at on a map of everything they can learn and empower them to self-navigate to where they want to go, learning what they need, when they need it. Now suddenly their passion to become a Nurse or bicycle mechanic translated into a personal path of learning that was relevant to their goals. This approach allowed us to leverage their innate personal motivations as opposed to relying on external motivators like teachers, and parents, which were not around. A few years later, while working with various universities, NGO’s and UNESCO I started to explore the same challenge but from a different angle, empowering kids to tackle real-world problems and to tie those goals to the things they should learn, we called this the Open Social Innovation project. Here kids would be empowered to build solutions to their local challenges, like building a water purifier, a solar electric power supply or an electric wheelchair. Here each project would be broken down into many small modules, and all modules shared openly, so that others trying to solve similar challenges would not need to start form step one, but could leverage the work others have already done.

Then, suddenly life happened, and all I was doing became really personal. My 5-year-old daughter suddenly became paralyzed over the span of a few hours, due to a rare illness. During those first few days in the hospital, after finding out there were no treatments we could afford, and only a 2% chance of recovery, we decided to use this as an opportunity and put to practice the work I had been doing over the past few years. We decided to build a solution to help her regain the use of her paralyzed limbs.

First, using the learning map method from the moonshot education project, we mapped out where we were and where we wanted to get to, which showed us all the things we needed to learn. Like 3d printing, electronics, signal processing and machine learning. Then we used the Open Social Innovation method, to start building and learning as we went, reaching out to strangers from around the world to share their expertise and advice.

Every day we worked to solve her challenge, and soon we were working with hundreds of experts from around the world. We mapped out all we needed to learn, and applied Open Social Innovation to build all we needed to build. A year later, leveraging the collective intelligence of strangers from around the world, she and I built a brain-controlled exoskeleton, 100X cheaper, 10X lighter, and one that enabled her motor neurons to repair themselves. Her story is really inspiring, but more so, it was a powerful validation of what I've been working on. Which is to radically increase innovation, by accelerating learning and doing within a highly modular, open network.

This brief snapshot of relevant parts of my background will help inform you where I am coming from for the rest of the post, including what my biases are, and the perspective that shape my vision.

Creating The Context.

Humanity has for many thousands of years already started building a collective intelligence, but before we jump into it, in this section, I’d like to accomplish two main goals: 1. Provide context for the remainder of the paper and 2. Use this context generating process as a tangible example of how super collective intelligence can be generated.

Let’s start with context: This is really important, because a lot of what I want to discuss here needs to be viewed from a Macro, global perspective, and not through our individual local contexts we live in. Local contexts vary considerably, and local solutions in one context may actually harm efforts in other contexts. As an example, as I write this, New York City where I live is going into lock down due to the coronavirus. It's an important local solution, but unfortunately something that is harming efforts to contain the virus in other localities as some New Yorkers, move to other locations not in lock down. The same is happening in South Africa where I grew up and in India, where I now spend a lot of time working. There, due to the lock down, migrant workers who are no longer able to find work in the city, are returning to their villages, and there, the result may be catastrophic. One local solution is creating challenges elsewhere. For this reason, I want us to take a step back, way back, let's choose to put to one side our local contexts, like our political structures, our economic structures, our existing local solutions. Let's boil the challenge down to a point where it cannot be distilled any further, and I want to try and do this by discussing the context.

Humans have developed a complex world built on centuries of ideas, stacked on top of each other like bricks, many of which we now simply take for granted. And while many of these idea bricks are powerful and have been vetted rigorously, on occasion some crack or are no longer fit for purpose, but could be hidden from sight as so many other bricks are stacked on top of them. While I will mention how some of these bricks are cracked or how they are unfit, I want to start far below them, I want to start with a new foundation, on top of which those cracked broken bricks may not even be required.

Context modularity

When discussing ideas that require us to come back to first principles and build them from the ground up, we need to painstakingly build a new shared understanding. Consider the trouble Tim Berners-Lee, the inventor of the World Wide Web had when he first started to pitch the World Wide Web. At that point there was no mainstream shared understanding, no context that could be leveraged to help him create an easily understood 30 second elevator pitch for the public. His 30 second pitch was: “I want to take the hypertext idea and connect it to the TCP and DNS ideas” … Mmmm ok, this is still confusing today, but back then in the late 80’s and early 90’s this could have as well been a different language. Tim and a vast group of collaborating engineers had to create a new shared understanding, a new way of describing things and communicating things, a new context. You can imagine them building this context over time one idea at a time, with each new idea able to be stacked together with other ideas gradually building a sophisticated shared understanding. So let's do that here, but let's do it a little differently.

Unlike traditional shared understanding, like how the earth was thought to be the center of the universe, and when we discovered it was not, became incredibly difficult to amend, replace or remove from our world-views, let’s make sure the ideas we build here ARE interchangeable, amenable and easily replaced! I’m well aware that I may be wrong on occasion, in fact, I hope I am, so that I can demonstrate how easily it is to quickly replace some brick of shared understanding with a more accurate brick. As we will discuss later on in this post, one of the key elements of a powerful collective intelligence is the ability of it to rapidly refine, reuse, and replace information. I think a reasonably good analogy is to think of ideas as Lego bricks. Bricks which should be designed to easily be stacked together, swapped out for others, recombined with yet others, localized, amended or scrapped altogether without needing to totally dismantle all you have built.

lego brick by Lluisa Iborra from the Noun Project

Lego bricks work because they have a simple modular structure, they have an input side, where the indents are designed to be able to accommodate certain other bricks, they have a shape, design and color which gives the brick its meaning and purpose. And they have the output side, where other bricks could be stacked on top.

And that’s what I want to do here first, I want to start by building the context bricks with which I’d like to discuss Super Collective Intelligence.

How the rest of the book will be structured:

The way I am writing this book and how it is structured is meant to also be a tangible example of how super collective intelligence can be generated. One idea building on-top of another until the sum is far greater than it’s parts.

We will start off describing the Context bricks,

Then the foundational bricks that can be built on top of that context,

Then the solution bricks that can be built on top of that foundation,

Then the Implementation bricks

And finally ending with the scale bricks

lego brick by Lluisa Iborra from the Noun Project

Context Bricks 1 - We are in deep shit

But Shit makes for really good compost, out of which we could grow something amazing!

Context Bricks 1 - We are in deep shit

But Shit makes for really good compost, out of which we could grow something amazing!

We are in deep shit, but this is a huge opportunity

The goal of this chapter is to define some initial bricks around the extent of the challenges ahead, and how deeply rooted, and therefore foundational they are. Deep rooted, foundational challenges require a special solution, usually one that requires a complete rethink. And this is the key point of this chapter, the idea that our current challenges cannot be solved by business as usual, in fact, I hope to show that we cannot even rely on our foundations. We need to take this all the way down to first principles.

Consider the leaning tower of Pisa. Over the two hundred years of its construction it started to lean quite early on, the foundations on the one side of the building were poorly developed and lay on softer ground. And even though they could have rebuilt the foundations when they were still early on in its development, this was considered too costly, so they instead tried to mitigate the leaning by building further layers slightly curved in the opposite direction of the lean to counter the lean of the building. In the end even this did not work and over the years modern engineers have tried to reinforce the foundations by injecting them with cement grouting and attaching cables boulted around the tower to try to hold it in place. The modern world is built much like the leaning tower of pisa, on faulty foundations. And much like the architects and builders of the tower, we have been trying to mitigate this challenge by building solutions that focus on the symptops (the lean) instead of the cause (the faulty foundations).

The world is going through tremendous challenges over the short to medium term, particularly over the next 25 years. Climate change, Job disruption, nuclear proliferation, synthetic biology, superbugs and income inequality, to name just a few. The challenges we will face, while individually, are similar to those humanity has faced many times before like automation causing job loss, now however, because we are facing them all at once and at an increasing rate, they could quickly outpace the speed at which our traditional mechanisms have been able to generate solutions. It could quickly cause our leaning tower to collapse. This seems already to have been the case for some parts of our tower. Let me expand on this using two points:

Point 1, You realize we still need to pay back our credit cards:

If you have watched TED talks, then perhaps you already know of Dr. Hans Rosling who beyond being a TED rockstar was also a profoundly compassionate humanitarian. In some of his talks, and his recent book, Factfulness, he described the world through data and showed how incredibly far we have gotten as humans and how good we have it now compared with the past. If we look at the data, it's incontrovertible that we are far better now in many important ways than ever before. People who are living in absolute poverty is diminishing rapidly, child mortality has dropped many fold since measurements began, girls are getting an education and we now have more flavors of salad dressing than there are active conflict zones on our planet. Many others share similar points, Like Dr. Peter Diamandis, author and founder of the XPRIZE, among numerous other accomplishments. He too makes a great case in his book “The future is better than you think”. A few years back when I worked with him on one of his ventures, Planetary Resources, an asteroid mining company, we spoke of how soon modern technologies could foreseeably deliver to us an abundance of anything we can think of, including gold, platinum and other rare earth metals, from asteroids. These are strong arguments, backed up with data. Today is far better than the past, and likely advances that are soon to come about will expand on this progress greatly. However, what is missing is what has fueled this progress. And how in the short to medium term we can expect to fuel continued progress, or even to simply sustain the burn rate we currently have. We need to realize we still need to pay back our credit cards. If we look at data coming out of the UN, described in more detail in point 2 below, this progress, however amazing it is, has come at a huge price, a price we have not yet paid. The progress has been funded unsustainably, by the natural resources of our planet. It's similar to how you can temporarily increase your standard of living by putting things on a credit card. For example, almost anyone reading this piece could easily increase their standard of living temporarily, by getting a few new credit cards. You could then pay for the surgery you need, the education your kids lack, and the care your parents deserve, You could buy that new car, an 8K TV, go on holiday, eat out and go to extravagant parties (well maybe not for the next few months). And in all accounts the quality of your life, and those around you would increase. But give it a few months or sometimes a few years -for those talented enough at conning their way to more credit- and things will eventually catch up to you. Yes I agree with Dr. Rosling and Diamandis, that things have gotten a lot better, and that the future could become far better than we think, but this progress has created huge dept, just like credit card debt, which will need to get paid back, with interest. One option, which I’ve heard increasingly spoken about, especially by climate change deniers, or those well off few, is to simply continue the rapid resource extraction by unregulated mega corporations and oligopolies. Allow the free market and the invisible hand to guide humanity to a more valuable short term future - valuable for the small number of shareholders that is. Then as we bankrupt earth, hope to travel the stars in search for a few replacement planets that we could move to. Let’s consider this quickly: There are many natural examples of this happening: A reptile as they first grow up in their egg uses up all the resources of that egg before they bankrupt it’s resources, break free from it and move into to a completely new environment. We could consider earth as being the egg of human civilization. But an obvious counter to that is, what about all the non-human life that also calls the earth their home, many of which we have already brought to extinction. I consider this option here, not because I believe we should take it, but because it is a valid option and one we may need to take assuming we do little to change and we don't destroy each other before this is possible. There is also the option to hope we invent some technologies that could help us start to become more sustainable, and there are numerous such technologies currently in the pipeline. From renewable energies, water desalination, vertical farming to synthetic biology. But the rate of these advancements are simply too slow. At our current pace, even taking into account these technologies, we are on track to lose as much as 30 to 50 percent of all species on the planet by 2050.

Point 2, We need to Marie Kondo the shit out of the world

If the goal is to retain the Earth, then the costs of paying back this Progress Debt, could far exceed the monetary value we have extracted from it, similar to how paying back a car on credit will cost you more in the long run than it would have had you paid for it with cash. I do get the opportunity cost argument, that sometimes it’s far more advantageous to put things on credit if the interest rates are low and the return on investment of that purchase exceeds the interest payments, but here things get scary. It’s not those that created this Progress Debt that will need to pay for it! Those that created this dept will be far gone when it's time to pay it back, and for them it was a bargain, for them the opportunity cost calculation made sense, for them the cost has been zero. They never needed to pay anything back, they effectively got to take out this progress dept at 0% interest without the requirement to even pay back the principal. But that calculation is not as favorable for our kids and future generations, they are the ones that will need to pay back this dept, assuming they want to keep the earth habitable. Let me illustrate just how much debt we now have by looking at what we have spent this debt on, and how little of that is actually providing us a net positive return on investment: A report funded by the UN conducted a multi year deep analysis of the top 100 industries, with a seemingly simple objective. Add all the REAL costs of the industry to their balance sheets and see which would still be profitable. The report showed that greater than 90% of the world’s top industries would not be profitable if they paid for the natural capital they used and damage they are doing. If you were to add their externalized costs, which are costs that companies don't pay I.E the Progress Dept, and you add that to their balance sheets, they would not be profitable. For example the forestry industry don't pay for the true cost of the forests they cut down, oil companies don't pay for the pollution and damage they create, the garment industry does not pay to remove microfibers from our water supply. Almost all of the top 100 industries are in the same place. Think about what that means. It means that almost everything we spent our credit on was spent on assets that cost us more than they provide as a return. Instead of purchasing things we needed and would have caused a net positive investment for us, we blew it on bullshit short-term extravagances. Imagine the whole world were represented by the following family:

Instead of purchasing the laser eye surgery they needed, they purchased a nose job instead

Instead of getting their kids a better education, they spent it on sending them to glorified daycares

Instead of getting their parents the care they needed, they bought them pain killers.

Instead of buying the car they needed, they bought a 6.2 liter SUV’s to do their grocery runs with.

Almost everything this family has spent their credit card money on, while temporarily increasing their standard of living, were really poor investments. None provide them any real net return, and simply compound the debt they will need to pay back.

What this means is that if we want a sustainable world, one in which our kids can still live, we need to fundamentally change how the world does almost everything it does, from the way we create toothpaste and build our homes, to the way we produce our coffee and the socks we wear. All those industries, all those factories, all those shops, and means of production will need to fundamentally change. Consider how disruptive it would be for you to change almost everything you currently do. This is the extent of change we need to aim for if we want a sustainable future. I know this sounds impossible, but bare with me for a second. Let’s forget what is possible for a second and come all the way down to first principles. Let's imagine we could redesign everything.

Re-engineering almost everything we are doing is a huge challenge but also a huge opportunity. Consider how much better we could design the world now with the experience we have gained over the past few thousand years. Disruptive innovation could not only come to replace unsustainable industries, it could provide educational and occupational opportunities that don't currently exist, outpacing the jobs being lost, and in their place create sustainable jobs, which inherently would be far more fulfilling and meaningful, than the bullshit jobs available today. Later in this post I go into detail discussing how we can go about rapidly re-engineering the world from the ground up, using a technique we see numerous natural collective intelligent systems use, like bacteria.

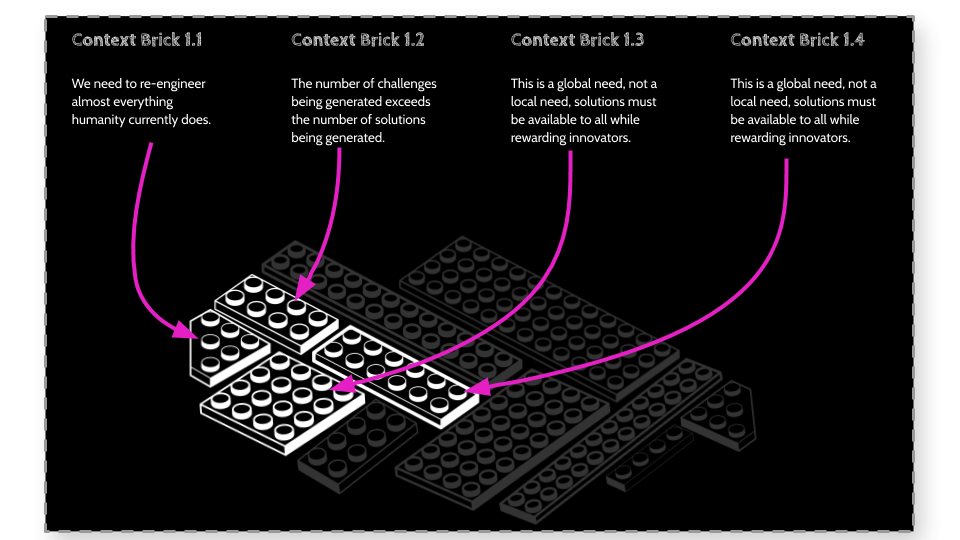

Therefore to conclude this chapter, here we described the first few context bricks we will use during our discussions. Each of these bricks will be reused and recombined with others later in the paper and to visually make this more interesting and understandable, I’ve created these bricks here:

Note that as we go, you may have disagreements with the ideas I’ve discussed. It would be useful to focus your disagreement onto one or many of these bricks, highlighting where you think the input, idea or output need to change. Jointly we could then decide if we should update, replace or possibly fork -which is essentially, creating a copy of something making some changes to it and then running both versions in parallel (A concept I’ll dive deeper into later in the paper).

[needs updating] Context Bricks 2 - Our ignorance is increasing

That's ok, but we need a framework within which to collaborate in a world of increasing divergence.

[needs updating] Context Bricks 2 - Our ignorance is increasing

That's ok, but we need a framework within which to collaborate in a world of increasing divergence.

[NEEDS Updating, Add technical examples, cost calculations…]

Our ignorance is increasing rapidly

In this chapter I want to continue describing some contextual bricks that form the terrain on top of which we need to build, but from a different angle. This is a big one for the medium to long term and may not seem to be relevant for the next few years, however this is an illusion, these challenges will be creeping up really quickly given that they are exponential in nature. Meaning that while today it may be manageable, next year it could be double in size, and the following year, doubling in size again, and then again and again… Discussing this challenge here will help frame the conversation around collective intelligence, education, open innovation and our limited personal capacity to learn everything there is to learn. Here I describe how the amount of knowledge we need to function is increasing exponentially, and inevitably will exceed the capacity of our brains to learn, retain and pass on that knowledge. I’ll share some ways we have overcome this challenge in the past and how we can learn from that to radically increase our capacity to learn in the future.

Source: https://imgur.com/gallery/zgXHFNg

Collective intelligence is an emergent property of certain types of networks, like an ant colony. Here the collective intelligence of the Ant colony does not require the singular ant to understand everything, it just requires the ant to operate within a framework where collective intelligence can emerge. Do we need humans to understand everything? Is that even possible? Can we create such a network and an appropriate framework for us humans, where, while individually we may not understand everything, collectively we do? Do we already have such examples and could we expand on those and increase their capacity?

Let's start by looking at how human networks first started. This has been a personal fascination on mine for many years, and two research books stand out of the many I've read namely, Sapiens by Prof. Yuval Noah Harari, and Blueprint by Nicholas A. Christakis MD PhD. Each of these books, considers our history in extraordinary detail, some of which I want to highlight here: Let’s first consider prehistoric humans, hunter gatherers, traveling in small family groups, roaming the land in search for food and shelter. Back then, within a decent lifetime you could learn all there was to learn about foraging, hunting, food preparation and survival skills. From your perspective you could learn and know all there was to learn and know within a lifetime. This is not unlike the experience of other animals, who within their lifetimes also learn all there is to learn from their perspectives. A lion learns how to hunt and survive by first playing it out while they are young cubs, then later they learn through experience and the environment, by the time their lives end, there is little new they could have learned from their perspective. Sure, they probably did not contemplate the theory of relativity, but they also did not need to in order to catch the gezel drinking at the river's edge. And for about 2.5 million years prehistoric humans lived like this, similar to our animal brothers and sisters, we learned and knew all we needed to and this was the capacity to which our brains evolved to handle. Then 70 thousand years ago, looking at the archaeological record things started to change. Our brains were suddenly in a position where they needed to accomplish much more than they were evolved to handle, and yet we managed this. But How? This could not simply have been evolution. Evolution takes millions of years, and yet somehow we managed to extend our mental capacities within thousands of years.

Before we discuss some of the ways we managed to do this, let’s first put to rest some pseudoscience about the human brain. There is a myth that has been pushed over the past few years, mostly by pseudoscience and overly creative hollywood directors, that humans only use 10% to 15% of our brains. This myth came from studies that sensationalizing journalists misread. When researchers started connecting electroencephalogram (EEG) sensors to the brain and measuring which parts of the brain light up when individuals do certain things, what was noticed, and quite logically, is that at any one point only about 10% to 15% of the brain was active. This was immediately jumped on by these journalists to construe that we only ever used this small percentage of our brains. But what they failed to recognize, probably intentionally, was that over the course of normal brain function, as we do different things we DO use all of our brain. We use different parts of our brains for different things. Some parts are used when reading and others when driving a car, we don't use all of our brain, all at once. It's as if they read that Chefs only use 10% of their ingredients in each meal and came up with the conclusion that chefs were only achieving 10% of their potential. They are essentially saying “Imagine how amazing the chefs food would be if they used 100% of their ingredients…” Can you imagine a mushroom soup that also included coffee, peanut butter, shrimps, mint, olives, watermelon and dark chocolate? We don't have a spare 80% capacity that we just need to figure out how to tap into. Our brains have evolved the capacity to accomplish what they need to accomplish, and for 2.5 million years, it did not need to accomplish much!

But that does not mean we could not hack our brains and find some useful approaches to boost some aspect of it. This is what we started to discover thousands of years ago, we started to discover methods we could use to creatively boost our mental capacity. Similarly to how we discovered we could boost our physical ability by employing the muscle power of other animals to plow our fields.

It looks like that about 70,000 years ago we started to discover ways we could boost our mental capacities, which resulted in the Cognitive revolution. During this time, we invented the cooking of food with fire which released more usable calories, more usable calories would have resulted in more usable time to develop more sophisticated language, cultures and societies. More sophisticated language would have helped more complex ides being generated. I don't pretend to know the full story, and the full story is not relevant here, but a part of it is: Around that time, humans started agriculture, domesticating animals, forming cultures and larger social groups. We also started to specialize, some of us became dedicated hunters, others took care of domesticated animals, and agriculture and yet others moved into healing, child care and governance. Now within a lifetime you could no longer learn and know all there was to learn and know. From here on our perceived ignorance started to increase rapidly. In order to function, we needed to create a basic level of trust, community and knowledge, which could form a framework upon which we could specialize and still retain the ability to communicate and interact with other people who are specialized in quite different areas.

Seventy thousand years ago, when we started building larger communities and started to specialize, it forced humans to become more cooperative. And even though we could not individually learn all there was to learn, we figured out a few tricks, a few mental hacks that even though they did not increase our mental capacity on an individual level, did on a communal level. If we could no longer learn and know all there is to learn and know, perhaps we could share it across many individuals, and create a simple framework within which we could leverage other people's knowledge as if it were our own. We could comfortably operate in an environment where our ignorance increased because we offloaded that ignorance to someone else. That way we could each specialize, and as a collective, a collective intelligence could emerge, even though no one individual knew and directed everything. Now, if I were a farmer, I could give some of my crops to the hunter, and they would give me some of their meat. I no longer needed to know everything there was to know about hunting, I now only needed to know what the hunter wanted (their input), a basic understanding of what they did (the process) and what the hunter provided in return (the output). I hope this input, process, output is reminding you of a common theme here.

lego brick by Lluisa Iborra from the Noun Project

Now this simple hack, of sharing the burden of knowledge among a small community where everyone knows each other and what their inputs and outputs are was successful, but only for small communities. This hack became unfeasible for larger communities, and relates to humans' limited capacity to only be able to maintain stable social relationships with at maximum 150 other people, called the Dunbar’s number. This lack of mental capacity limited the success of these communities, and their collective intelligence. And so for the next 20 thousand years we started to explore ways of hacking our mental shortcomings again when we started building larger communities.

And this is when we discovered another series of powerful hacks, we could create extra-realities that are not bound to what we can see, feel and experience, but rather an extra-reality we simply choose to believe in. A simple contemporary example is our hack to create an extra-reality for currency. Now instead of us carrying bushels of wheat, goats, fish or gold, to purchase a piece of clothing from the mall, we can pay for it with a piece of paper, or more commonly, a string of zero’s and ones, that have no intrinsic value. One of the most successful shared extra-realities we’ve built which allowed for mass cooperation, was religion. Religions allowed for a whole lot of complex structures and agreements to be put in place, ones that were not coded for in our DNA, but rather ones we created. These extra-realities allowed us to hand over even more of our ignorance, no longer did we need to know everyone in our community, we could still cooperate with them knowing which extra-realities they believed in. These extra-realities, had significantly more capacity than our initial hack, that simply offloaded our ignorance to our small community of neighbors who we need to deeply know. Now instead of knowing what the inputs and outputs are of every individual we met, we could hack that requirement and simply learn what the inputs and outputs are of these extra-realities, and then as long as the person we are interacting with subscribed to one or many of the extra-realities we know about we could start to interact and collaborate. We could start to generate a collective intelligence that greatly exceeded the limitations of Dunbar's number, that historically seemed to be one of the factors that limited growth.

Now these extra-realities allowed for an increasingly complex society to be created, the more well defined the shared extra-realites were the more complex and seemingly “successful” the society could become. And all this was done without increasing the capacity of our human brains, beyond incremental improvements that resulted from more healthy living, and more efficient learning practices. We can imagine shared extra-realities being like the bricks we are building here, the more complex and well defined the bricks are, the more complex the structures we can build with them could become. We don't all need to understand what went into the design and building of a lego brick, we just need to understand what the inputs, and outputs are and if it's design fits with our goals.

Source: https://cheezburger.com/8350409984

A side point to consider is that these collective intelligent systems we were starting to build were not just limited to groups of humans. The ability to create shared extra-realities that are beyond what is physically experienced, is something that is quite unique to Humans and may be one of the contributing factors to the cognitive revolution that occurred 70 thousand years ago. Other animals don't have religions as far as we know, they don't have a monetary system where the monetary instruments don't actually have value, like we do, Although there have been some experiments that put this into question. As far as we know humans are the only animal that has accomplished this. But that is not to say other animals were not somehow also connected to this collective intelligence. They too played an important role. A prime example was the wolf, that over those 70 thousand years has become the dogs we love today. They provided our collective intelligence with information humans alone could never have perceived, like the smell of a threat 2 kilometers up wind, the speed and strategy to allow us to herd large flocks of sheep, or the added protection afforded us while we slept. Here too, all that was needed to add them to the collective intelligence was to understand their inputs and outputs. A collective intelligent network could cross species lines, and possibly even political lines… maybe.

So for the past 70 thousand years these hacks allowed us to significantly boost our mental capacity, first by offloading much of what needed to be learned to specialized experts in small communities, and later to large extra-realities within which millions and billions could operate, offloading more and more not only to other humans, but also to ideas -extra-realities- we simply made up and chose to believe in. However, even these extra-realities have their limits. Most are centrally controlled, which seriously limits their ability to scale, grow, mature and keep up with our requirements. When we first discovered Newton's theories of motion and learned that the earth was not the center of everything, it look many hundreds of years for this new “brick” of reality to be included into the extra-realities we subscribed to. Centralized control, while great at providing stability, is really poor at innovating, which I’ll go into more detail on when discussing open versus closed innovation, later in the paper.

Today, many of our extra-realities are starting to reach their capacity. The rate at which new knowledge is generated is increasing exponentially, our bricks of new understanding are rapidly being stacked on previous bricks, and it is becoming increasingly difficult to retain enough of a shared understanding to facilitate strong cooperation. Even within a singular highly specialized domain, the knowledge generated often exceeds the speed and capacity for specialists to learn and retain that information. Take specialized fields like neurology, quantum physics, computer programming and machine learning. Here, if we were to read all the latest findings within that field, by the time we finished reading about them we would have double the amount of new findings to then read about, and once we finish that, we would again have double the previously already doubled amount to read up on. The rate at which new knowledge is generated in many cases is becoming exponential. And yet we are limited by the finite capacity of our minds to learn and process that information, and the linear growth in complexity of our shared extra-realities we are using to effectively communicate and collaborate.

Even if we simply consider what information our kids should learn as a foundation, this too is increasing far quicker than we have the ability to learn. Consider how poorly the current educational system is preparing our kids for the future they will live in. When factory model schools first started, we could fit all our kids of different ages into one classroom and over a few years, teach them all they needed to know to form a foundation on which they could then operate and effectively communicate with others around them. Then as more needed to be learned to have an effective foundation we split them up into age groups and created secondary school. Then when that was no longer sufficient, we created college, then the Master degree, then the PHD, and now in some fields you need several post docs to start out. Now I do think this trend can be slowed down somewhat by offloading some to this foundational knowledge, like needing to memorise random facts and learning to drive a car, both of which are becoming redundant. But, and this is a big BUT the amount we can offload does not compare to the amount to new knowledge our kids should learn. This not only includes life skills, that are critically needed like communication, collaboration, critical thinking, creativity skills but also includes a rapidly growing amount of contemporary topics like how to manage your online persona, navigate the challenges of social media, news and political bias, broader sex and drug education, online privacy, avoiding scams, modern financial literacy excetra. The amount of foundational information needed to be learnt is itself increasing exponentially.

If we extrapolate this phenomenon, the distance between people's understanding and worldviews are only going to increase… A black mirror episode could easily be created on this phenomenon, showing two well meaning kids who grow up within two distinct groups, whos base knowledge and world views differ so profoundly, simply because they now live in a world where there is too much to know, that they both look at each other not as humans that share the same DNA but as something that's alien. We can already think of various example of this happening today.

We need to figure this out, we need to overcome this limitation to allow us to continue progress towards greater understanding, maturity, compassion and love for all humans and all life.

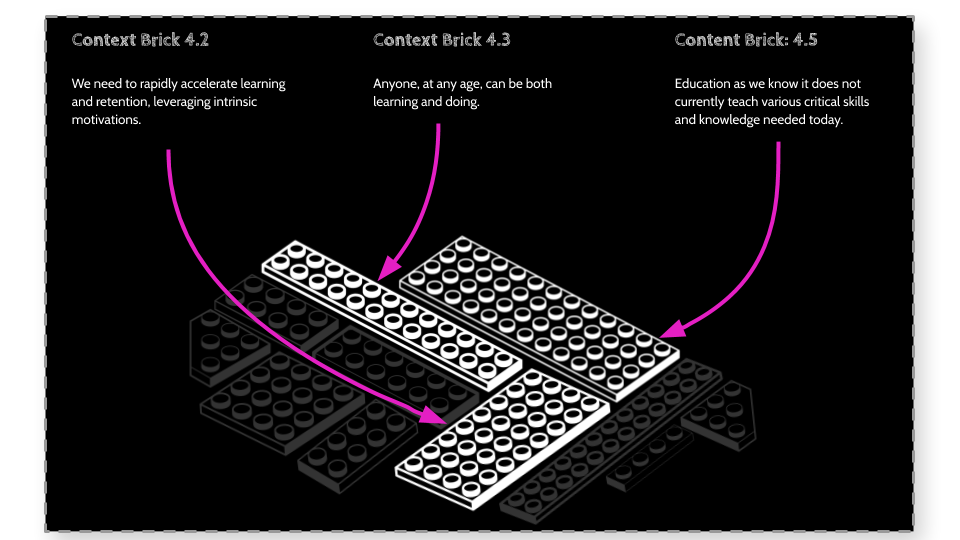

Therefore to conclude this chapter, I’ll describe the next few contextual challenge bricks we will use during our discussions that represent our need to create a scalable framework within which to collaborate, despite our many limitations.

[lego brick by Lluisa Iborra from the Noun Project]

Context Bricks 3 - Two sides of the same coin

Context Bricks 3 - Two sides of the same coin

Two sides of the same coin, Learning and Doing.

First, we learn how to do, then we do what we learned, increasingly these happen years apart, however, we can radically accelerate both learning and doing if they occur in parallel and in real-time.

In the next two chapters, the final two of this context building part of the paper, I’d like to focus the discussion around education -when we learn how to do the things we need to do in adulthood- and then on work -when we do what we learned how to do while in school. It seems that work and education have been designed to follow one another: for 20 years we get educated and learn things, then for 40 years we get to work and do things, followed by 20 years of complaining about the many things we could have learned or done. In reality learning and doing are two sides of the same coin, a coin we could flip between multiple times throughout our lives, even multiple times a day. While the next two chapters focus the discussion on why education and work are ill-designed for collective intelligence, an underlying theme that will be picked up on later is that this two-sided coin, if redesigned, could allow us all to flip between these two sides seamlessly at any stage in life, even multiple times a day. Why can't my 5-year-old daughter, do real work like designing and building an exoskeleton that helps paralyzed people move? Why can't a 60-year-old learn to become a game developer after spending a lifetime as a plumber? Learning and doing are two sides of the same coin, we should not think of them as separate, we should not limit them to certain ages, they are one and the same. They are continuous.

Consider how we learn and do things before and after we leave school. As kids before we go to school, we learn by doing, by experimenting, by playing, by arguing, by exploring. Then after we finish school, and if we are lucky enough to work in an empowering job, how is it that we learn? Well, we learn the same way, for example, when I suddenly needed to implement a new machine-learning algorithm, I learned by doing, by experimenting with examples that others provided, by playing around with other similar solutions, by arguing with my fellow team members, by exploring random projects. The way we learn before and after finishing school follows a similar approach, And yet, why is schooling, the time we are supposed to be learning the most structured so fundamentally different? This structure is a considerable bottleneck and friction that slows down collective intelligence almost to a halt, to time scales years in length. In the next few chapters, we will start to look at ways we can reduce these time scales to hours and minutes, the timescales needed to spark Super Collective Intelligence.

Context Bricks 4 - Education, not setup for collective intelligence

Context Bricks 4 - Education, not setup for collective intelligence

Why is education, the way we learn things, currently not setup for collective intelligence.

Our educational systems are ill-designed to support collective intelligence and support the development of solutions to the context bricks already discussed above. Here I’ll discuss some reasons why, namely the slow speed at which new learning is propagated, the disconnect between learning and doing, and the inefficient way we teach through producing one-size-fits-all pathways. All of which limit the emergence of collective intelligence.

To illustrate, let's first talk about foundational education, this is what we learn in school, colleges, and universities before we enter the workforce. I must admit that I’ve been utterly frustrated with this part of education since I entered into it at the age of 6, However, I’ve ironically seen my career path move closer and closer to this domain, and now I spend most of my time here. This foundational education model has seen very little change since the factory model of education was invented about 150 years ago, at the birth of the industrial revolution. Sure, the content of what is being taught has expanded, and the methods have improved, but the underlying structure of it, the foundation, has remained the same:

Let’s get a bunch of kids, group them by year,

Assume they are similar,

Process them through an assembly line process, removing any imperfections like their differences.

And send them into the factory, that is the world, assuming that the education we designed several years ago is still relevant today.

And only once they finish 20 years of this, will they be accepted in the real-world where “real work” is done.

Now we have probably all heard the same frustrations from hundreds of educators, parents, and kids, but how does this relate to collective intelligence and emergent learning? The first issue is the speed at which new knowledge can propagate through the network. Currently, it takes several years for new knowledge to be incorporated into the network. As an example, when science or business discovers some new concept, it first gets worked on and refined within closed siloes, then limited information is published if we are lucky, and several years later once patents have expired, it gets incorporated into a new curriculum before its more widely propagated. We need this to happen in real-time for super collective intelligence to emerge. New discoveries, methods, science, technology and knowledge should seamlessly be translated to new learning in real-time. Currently, our systems, education and industry are not designed to handle this, but with some small, yet fundamental tweaks, our systems could be structured in such a way that learning and doing are built hand in hand, where work and education are so closely aligned, that their distinctions become almost irrelevant, and we will discuss this later in the paper. But before we go there, let's talk about some other reasons education today is not well designed to foster collective intelligence. Let's talk about learning paths.

Education at its smallest component is about teaching singular granular concepts, one at a time, and as more concepts are learned, stacking them together, as you would with lego bricks, to create more and more complex concepts that can be understood and learned. But we can't just learn a whole bunch of random concepts, these don't stick in our memory, and so over the years we have developed methods to help them stick. As Humans, we battle to retain more than a few random concepts in memory if they are not somehow connected together through context and narrative. Our brains are physically structured in pathways of neurons, called neural pathways. We don't have random access memory like computers do, we have pathway accessed memory. This is why we love stories and relate far better to stories than random facts that have no context or relevance. Even people who have a seemingly photographic memory and can retain random facts, like Ken Jennings, the world champion of random facts, memorizes these facts by creating pathways to them in his mind. If he needs to recall some random fact about Indian history, he travels down an imagined hallway in his mental castle, turns right at the kitchen, opens up the pantry of history, finds the section that relates to India, in this case, the spices section, then goes ahead and searches for the right combination of spices, tastes and smells that then trigger the correct memory of the random fact he needs to recall. Another example is how Daniel Tammet, can recall the first twenty-two thousand numbers of Pi from memory. He does this by converting each number into a color, smell, sound, and feeling, and then when he recalls the number, he simply recalls the "song" that is created by the colors, sounds and feelings. Daniel did not memorize the numbers, he has memorized a personal story, a song that represents Pi. Notice how personal Ken’s and Daniel’s stories are. They would not be able to recall as much as they can if they were to recite someone else's story. The more personally relevant the story, the more we can retain and understand. The more we can retain and understand, the more we can share.Teaching concepts within educational stories, therefore, makes a lot of sense. That is why creating courses that go through a sequence of concepts within a narrative framework, works far better than simply learning random concepts. So we got that part right, we create educational stories, but we did not get the personal part right. Remember, it was Ken and Daniel that created their own story which allowed them to succeed so well, it was not a story created by someone else that they used. Consider how few students get top marks and learn 100% of what there is to learn in the educational stories we produce for them. The stories we present to learners are so generalized that they have little relevant context for the learner to internalize. If you were to test the same students, even top-scoring students, a year after they finished their qualification, most would have forgotten what they have learned, because so very little has been internalized. It's as if we forced Ken Jennings to memorize our version of a castle instead of allowing him to create and use his own, Or that we removed the sounds, smells, feelings from Daniel Tammet's story, and instead got him to memorize just the numbers of PI.

We can think of the educational stories we currently create, like a lego model and the individual concepts being taught in them as lego bricks. But unlike lego that was actually designed to be broken apart, rebuilt, repurposed, redesigned an infinite number of ways, our educational stories have been glued together into impersonal models. We can actually use Lego and the Lego movie as a great analogy here. In the Lego movie, the dad "Lord Business" tries to glue all the Lego pieces together so that they retain their "designed" shape. Lord Business believes that the best way to build Lego models are exactly how they were presented by the "expert" designers at Lego. Now, Lego models were not designed to be used in that way. While it does help to sell bricks by presenting them in suggested models, ultimately Lego wants the bricks to be used to create something personally relevant to the person who plays with it. This actually makes financial sense for them. If a child does create something personal with the bricks, if they recombine them, design and build something personally, as opposed to simply building the pre-designed models once, then the retention and engagement the child has with those lego bricks is orders of magnitude higher than for kids who simply build the model once. If Lego can get a child to personally experience the joy of designing, building and playing with the bricks to create their own personal imagined stories in play, then those memories and feelings are imprinted deeply into the child's memory, this intern increases the lifetime value of that customer (the child) as they continue to purchase lego, for themselves and their kids when they grow up. If however the child simply builds the model once and does not create their own personal connection then, just like most other toys, they are quickly forgotten about and no deeply imprinted connection is made. To make the same revenue in that situation lego would need to create mass-produced build-once disposable-models and hope they sell enough of those to equal the longer lifetime value of more committed customers.

[Source: Lego Movie]

Will Lego focus on impactful deeper personal experiences, or more volume but throwaway experiences? As I see it, Lego has infinite possibilities, but only if you break the mold! Similarly, we have the same choice in education: empower learners to build their own deep and personal learning stories, or continue to mass-produce, one-size-fits-all pathways.

Now there is a lot more we can do to fix education, and I’ve written and spoken about that in detail elsewhere, but because this post is focused on collective intelligence, I will only briefly mention some of these points here that are still relevant:

There is another reason personal learning paths compared to one-size-fits-all paths are so much more valuable and that is that they can tap into the intrinsic motivation of the learner far more easily. In one project I was running in East Africa - You can learn more about it here, we were trying to solve the problem of motivating kids to learn on their own when they don't have access to teachers, schools or even parents that would encourage them to do so. We were building a digital tool that could rapidly be deployed to the hundreds of millions of kids in this situation but we needed to figure out this motivation challenge. We tried game mechanics, providing kids various extrinsic rewards, and while this worked very well for the first few hours and days, their engagement quickly diminished. We needed to find ways we could better tap into their intrinsic motivations, which is when we started to tie intrinsic goals to their learning. Now instead of trying to motivate a child to learn how to read, write and do math, which by themselves had no tangible relevance to any of their intrinsic motivations, we provided them tools to map out how to get to where they wanted to go. Now suddenly their passion to become a Nurse or bicycle mechanic translated into a personal path of learning that was relevant to their goals -I go into detail on how we did this, first in the Learning Map chapter, and later in some of the solution bricks. But for now, the point that is relevant here is that, intrinsic motivation, is a key component to learning, contributing and ultimately the motivation to contribute to a collective intelligence.

Finally, and I won't spend more than one sentence on this point, because so much has already been written about it. Is that education as we know it currently does not empower kids with various skills and knowledge they need today, like creativity, communication, collaboration, critical thinking...

lego brick by Lluisa Iborra from the Noun Project

Context Bricks 5 - Companies, not currently setup for collective intelligence.

Context Bricks 5 - Companies, not currently setup for collective intelligence.

Why are companies, the way we do things, not currently setup for collective intelligence.

Much like our educational models are ill-designed to support collective intelligence, so too are our companies because of how they are structured, grow, innovate and only share the value they generate with a small number of founders and big investors. To describe this, let’s consider companies to be a collection of bricks. Much like we considered educational pathways to be a collection of concept bricks stacked together in a linear path, let’s consider companies to be a collection of functional, process, organizational and technological bricks. that when stacked together create a company. You may have a business model brick, a go-to market brick, and sales strategy brick, a few product bricks and some business operations bricks...

Now in most organizations these bricks are not only glued together, they are welded, bolted and jammed so closely together they are often seen as a whole instead of a stack of bricks. But why is this? Traditionally, when you choose to solve some problem you would start some type of entity, probably a company, where you could then raise some resources, hire some people and then solve whatever is was you wanted to solve. Building such a structure was required because you needed to make sure you could coordinate all the separate pieces of the company to do what they needed to do. You would spend a lot of effort creating a management framework, and a whole lot of processes that tie these separate parts closely together, trying to make the transaction costs [the costs of handing a piece of work from one part to another part of the company] as small as possible. For hundreds of years creating such a structure was the most efficient way to accomplish this, and over these years we have developed robust mechanisms in support of it, like management frameworks, legal structure, process structure and physical structure; explained really well by Clay Shirky in his 2005 TED talk, Institutions vs. Collaboration. However, these robust structures, and a false belief that creating these structures will remain the best way to solve problems, has resulted in them trading the benefits of modularity, for the illusionary efficiency of gluing things together. This calculation may have made sense for many hundreds of years, but no longer, or as Clay Shirky describes it “We have lived in this world where little things are done for love and big things for money. Now we have Wikipedia. Suddenly big things can be done for love.”

There is a critical limitation in traditionally siloed companies and how they innovate: radical innovation generally happens only once within their closed systems, at the beginning of the venture or new product. After that initial radical innovation is implemented, the natural state of the system is to protect that innovation and from that moment focus on optimization. This optimization strategy is a successful approach to the extent that it maximizes the value of that initial innovation for the founders and big investors who retain most of the equity. However, it's not such a successful approach for the vast majority of contributors that actually make the innovation work and own little to none of the equity. Therefore, we see time and time again radical innovation generating a successful company, that then monopolizes that level of innovation, shifts to optimization and extracts value for the few instead of innovating further for the benefit of the many. One of my favorite books that presents research on this in extraordinary detail is The Master Switch by Prof. Tim Wu. Here he describes how some of the most innovative companies of the past 100 years fall into this trap, stifle innovation and get stuck, or as he says “if everything is entrusted to a single mind, its inevitable subjective distortions will distort, if not altogether disable, the innovation process.”

It is this getting stuck that limits the shelf-life of this optimization strategy. We can illustrate this by thinking of innovation as a point on a chart but where we only have limited visibility, a few steps to either direction (see chart). When we develop a successful innovation, we have somehow found an elevation on this chart, but because we don't have a lot of visibility to either side, from that moment onwards, we play it safe and take small incremental steps. We A/B test our product or solution, we run surveys and we focus on safe small steps forward. This is until we find the point on which any further innovation seems to make things less effective. This is a point in mathematics called a local maximum. It's the maximum height of the chart within a small local area.

Once a company reaches this point, it seems that any further innovation makes them less effective, and so they focus on optimization instead. Optimization, in these cases, further glues and solidifies the separate bricks together to make them more “efficient”. And while this optimization phase can still reap a lot more value, eventually there will be diminishing returns, as the resources required to optimize further outweigh the value that optimization will provide. A good example is Education, and Healthcare. On their current local maximums, their focus on optimization is now at a stage that the amount of return of investment per dollar spent on optimization is in many cases negative. Education and healthcare costs in the USA continue to rise, yet outcomes continue to fall. The only hope for more effective solutions is radical innovation. But at that stage, there is no easy way out for these companies, especially if they have glued their bricks together. They must either adopt a disruptive innovation or decide to let the venture run its course and close in bankruptcy after it has lost its value through obsolescence.

Let’s consider a real world example, Kodak. They produced a radical innovation in film based photography, and then spent decades optimizing their innovation. They had an exceptional run on their local maximum extracting a ton of value, however, their business model and shareholders did not allow them to radically innovate any solutions that would threaten what they had built. Even though Kodak were the ones who invented the digital camera, they could not get themselves to disrupt their existing model. Kodak went bankrupt when their market moved off of their local maximum to other innovations that generated far more value. In these companies, the bricks that make their business work are glued together, through internal processes that initially seem more efficient, but in the long term limit innovation. In the Kodak example, their marketing, finance, investments, technology, and product bricks were so closely glued together that it was impossible for them to even experiment with other bricks without causing their whole structure to potentially collapse. Consider what could have happened, if they intentionally kept the bricks that made up their business modular, so that they could easily experiment with replacing some parts with newly developed and updated bricks. They could even have quickly setup completely separate copies of their business replacing a few bricks here and there and creating multiple versions that tested the market. They certainly had the talent and market dominance to do so. Instead a 15 person startup, Instagram, became a successful billion dollar digital photography business with a market cap that now far exceeds Kodak at its peak, while Kodak filed for bankruptcy. Now this is not to say that the optimization strategy, and maximizing efficiency on a local maximum is a bad strategy. After all you would not want some of your governmental services like infrastructure maintenance to continually be disrupted. What I am highlighting here is the challenge that is presented by these traditional structures, and how, if what I described in the first few bricks is accurate and we do need to rapidly innovate and reinvent almost everything we do, then this challenge is one we need to tackle head on.

In the Foundational bricks chapter, when discussing the Doing Map, I describe an approach where if we redesign the way we build companies, products and innovate, we can create a structure that will allow for optimization and a structure that will allow for radical innovation to work in partnership, in a positive sum ecosystem. Imagine a company landing on a local maximum, focusing on optimization while allowing a smaller part of its structure to continue radically innovating, and when feasible merge any innovations into its main operation when it can minimize any disruption. In this positive sum ecosystem companies can enjoy the best of both worlds, however these companies will not look like any of the companies we see today, we may need to come up with a new way of describing them.

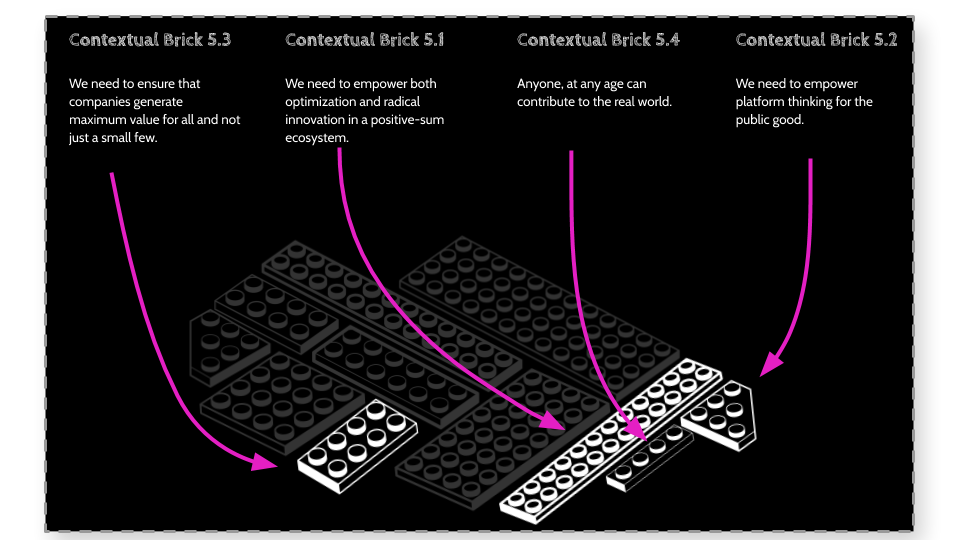

However, before we get there, there are a few more contextual challenge bricks I’d like to discuss.

Platform thinking as a public good

If we consider all the challenges in the world, a majority of them don't get addressed, because there is simply no sustainable business model that would fund the work needed to solve them, called the 80/20 rule, or Pareto principle. But that is only true within the context of our current economic models, something I discuss in a later Innovation market brick. For now I want us to recognize that a large portion of challenges require a different approach to solving them. They require platform thinking as a public good.

As an example, we can compare Microsoft windows and Linux and how the organizations around them operate differently to address the feature and bug fixes their products need. With Microsoft, it is a centralized system that determines which features and bugs to build/fix. This takes the form of a power law distribution where Microsoft focuses on the top 20% of features or bugs, as they would produce 80% of the value. There is hardly ever a business case that would justify them tackling something that is in the bottom 80% where only 20% of a value is expected. However, with an open project like Linux, the community can effectively tackle the other 80% of the features and bugs if they so wish Or as Clay Shirky describes in his Institutions vs. collaboration work: “...This kind of value is unreachable in classical institutional frameworks…” . It is often that Linux gets a contribution from someone, simply because that individual saw a problem they cared about and felt compelled to fix. This person did not need to have a formal relationship with Linux, nor did they need their permission or somehow convince Linux through a business modeling exercise that it would justify the development of that solution according to some 80/20 rule. This self-selecting approach within open systems is highly effective, especially as the contribution then becomes open and available for all to leverage and implement.

Rapid 1000X innovation is possible

Another key reason businesses tend to glue their bricks together is to retain strong centralized control, which allows a small minority to extract maximum value. Within these traditional organizational structures, the value that is generated is generally only shared with a very small proportion of those that contributed to its generation, those that happen to have the most equity in the company. If we want to maximize the rate of innovation, we need to maximize the incentives for all those who contribute not just those at the top.

Consider an alternative. Imagine that a business model brick, marketing strategy brick, various product bricks, goto market bricks and all the other bricks that make up a company were modular and openly available for anyone to leverage in return for a proportional stake in the businesses that use it. Instead of the bricks being developed by siloed teams stuck within a closed business, they could be developed by the best minds from around the world. These best minds, could be working on bricks across the power law distribution described in the previous paragraph. A decentralized group may be working on the perfect marketing strategy brick for some niche use case, another group may be working on a water desalination brick. Here a new venture could rapidly select from a library of bricks, combine them like you would Lego and have a portion of the value generated by the business be equitably shared to all those who contribute to any bricks used. We can even imagine companies being rapidly generated autonomously to accomplish a temporary local need, like a disaster relief effort, dissolving a few days or weeks later when the need dissipates. I will go into detail later of how this is designed to work, but for now just consider the differences between this described open structure and traditional closed structures.

To help us consider this comparison, let's look at a similar example of open innovation in action, using bacteria. Before watching this quick 2 minute video, understand that bacteria share genetic information openly, similar to how I quickly described it in the above paragraph. If one bacteria figures out a solution to a challenge, in this example overcoming antibiotics, they openly share that genetic mutation. The bricks that make up bacteria DNA are not glued together and inaccessible to others, instead they are easily shared and incorporated into other bacteria.

We can think of the bacteria that gets stuck on the outer edges as businesses that get stuck on their local maximum and are limited to only be able to innovate at a linier pace for risk of disrupting their existing business models. On the other hand, the bacteria that is growing towards the center are those that can innovate to achieve 10X, 100X then 1000X the original effectiveness, which is itself an exponential rate of innovation. This is the rate of innovation we need in the real world, and here, in bacteria, is one example of how this rate is possible. Here we see an example of what companies who embrace open innovation can accomplish.

Important side note: Recognize that as we have been speaking about companies, and contributors to those companies, I don't want you to think of the form they take currently. In all of this, I want you to take a step back, come down to first principles and consider not the form we currently have, but the function we need. We need children to learn real-world skills, if they currently feel disillusioned with their education and want to act to make the world a better place, then that function is something we should design any solutions to also fulfill. Why should children not be allowed to contribute to the real world, why should their putting to practice the theory they learn not be immediate.

To end this chapter, let’s distill what's discussed above into some contextual bricks, that we will then build upon.

lego brick by Lluisa Iborra from the Noun Project

Foundational Bricks

Foundational Bricks

The Terrain, reasoning from first principles.

The contextual bricks described above now form the terrain on which we need to build foundations. These are some of the core first principles I’ve considered when formulating what we could potentially do. The challenge is to now forget the Form of other solutions that may already be in place, and focus on the Function that is required to solve a particular challenge. Especially when considering Context brick 1.1, where we discussed that we need to rethink how the world does almost everything we do. If that's really what is needed, then following the form of existing solutions will not work. We need to develop new forms from scratch, choosing to focus on the function rather than believing all we should do is improve the form of what is already available. Our aim should not be to continue building the tower of pisa, but a new tower for the future. And that’s what the foundational bricks now try to do -forget all that came before and ask the question, If we could start from scratch with the benefit of all we know, what could we do?

lego brick by Lluisa Iborra from the Noun Project

Foundational Bricks:

So now that we have discussed the context and created the terrain on top of which any foundation should be built lets start discussing some of the foundational bricks we should place. This is where we will start stacking bricks on top of each other. If you recall, each brick has an input and an output, and the output of one should fit the input of the other for it to be able to be stacked on top of. I’ll continue to use Lego bricks as a simplified analogy, and to illustrate the modularity of what we are doing. Keep in mind that just like I mentioned at the start of the context section, this process we are going through to create these bricks is in itself an example of how collective intelligence can emerge. By now this analogy should feel like something out of the movie Inception, where we are using bricks to build bricks to build bricks…

Foundational Bricks - The Learning Map

Foundational Bricks - The Learning Map

The Learning Map

This learning map brick is designed to be built on top of and provide a foundational solution to the following contextual bricks:

lego brick by Lluisa Iborra from the Noun Project

Important: notice what is not included in the above. There is no mention of having educational pathways designed and run by institutions, there is no mention that these pathways should be taught by anyone in particular, there is no mention that these pathways should be accredited by anyone in particular… Let us intentionally not think about how we solved this previously. We need to stick to first principles. This will help prevent us from starting to think about previous forms (what we already have in place) rather than about function (what are the fundamental needs here).

The back story: